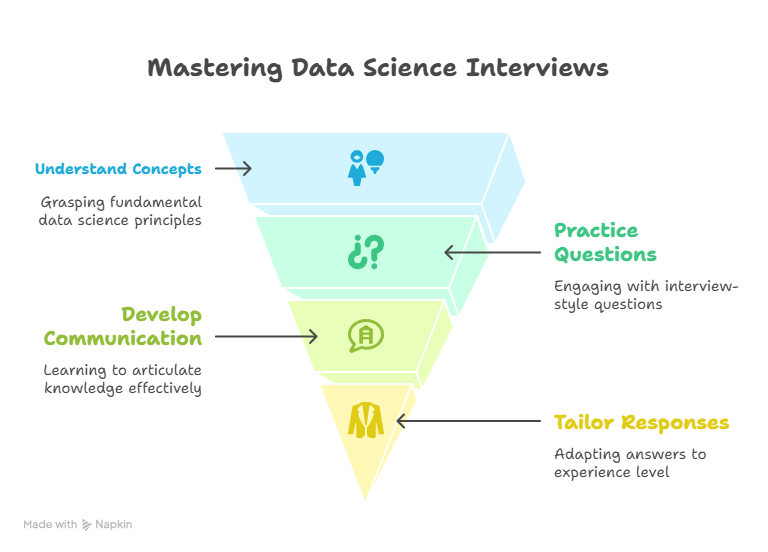

Data science is one of those fields that sounds cool on paper, with concepts like big data, machine learning, and AI, but once you reach the interview stage, it can feel like you’re suddenly being asked to solve a Rubik’s Cube blindfolded.

It’s not just about knowing stuff. It’s about learning how to communicate what you know. And that’s where most candidates mess up—not because they aren’t skilled, but because they don’t present their knowledge the right way.

This guide? It’s here to help you avoid that.

Whether you’re just starting out or already swimming in Python scripts and neural nets, these 40 data science interview questions are tailored to your level. Each one comes with a conversational explanation that you can use in an interview, not just textbook definitions. Let’s dive in.

Understanding What Companies Expect at Different Levels

Before we get to the questions, let’s clear this up:

If you’re a beginner (0–2 years), they expect:

- You know your basics: probability, Python, statistics.

- You’re clear on ML types and standard models.

- You can write clean code and manipulate data.

If you’re intermediate (2–5 years), they want:

- Fluency in algorithms, optimisation, and cross-validation.

- Ability to explain trade-offs and metrics.

- Business context awareness: not just “how,” but why.

If you’re experienced (5+ years):

- You should think in systems and strategies.

- You’ve led teams or owned projects.

- You tie ML to business outcomes, with impact.

15 Beginner-Level Data Science Interview Questions

Whether you’re applying for your first data science internship or a junior analyst role, most interviewers are less interested in whether you’ve memorised buzzwords and more curious if you understand the basics well enough to work with real data.

Here’s how to ace those “easy” questions that are surprisingly tricky when put on the spot.

1. What’s the difference between population and sample?

This one’s deceptively simple.

- A population includes every single entity you’re interested in studying.

- A sample is a smaller group chosen to represent the population.

Let’s say you’re analysing customer data for Amazon. The population could be all Amazon users worldwide. You can’t possibly analyse each of them individually, so you take a sample—say, 10,000 randomly selected customers.

Knowing how to sample well helps avoid bias and ensures that your conclusions can be generalised.

2. Why is sampling important?

Because working with massive datasets is expensive and time-consuming, sampling gives you a shortcut without losing too much accuracy—if you do it right.

3. Explain the Central Limit Theorem (CLT)

In simple terms: if you take lots of independent samples from almost any distribution (even a wonky one, as long as its variance is finite), the means of those samples will be approximately normally distributed, and the approximation gets better as the sample size increases.

This is why we can trust confidence intervals and hypothesis tests—even if the underlying data isn’t perfectly normal.
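If the interviewer asks you to back this up, a quick simulation is more convincing than a formula. The sketch below is one way to do it using only Python's standard library. The exponential distribution, the sample size of 50, and the helper name `sample_means` are illustrative choices, not anything the theorem prescribes.

```python
import random
import statistics

def sample_means(draw, sample_size, n_samples, seed=0):
    """Return the means of n_samples samples, each made of sample_size draws."""
    rng = random.Random(seed)
    return [
        statistics.fmean([draw(rng) for _ in range(sample_size)])
        for _ in range(n_samples)
    ]

# Exponential distribution with rate 1: heavily skewed, mean 1, std 1.
means = sample_means(lambda rng: rng.expovariate(1.0), sample_size=50, n_samples=2000)

print(round(statistics.fmean(means), 2))  # close to the population mean of 1
print(round(statistics.stdev(means), 2))  # close to 1 / sqrt(50), roughly 0.14
```

The spread of the sample means shrinks like sigma / sqrt(n), which is the quantity confidence intervals are built on.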

4. What’s the difference between correlation and causation?

This one often trips people up.

- Correlation means two variables move together.

- Causation means one causes the other.

Interviewers love real-world analogies here. Bring one if you can.

5. What are Type I and Type II errors?

- Type I error (false positive): You wrongly reject a true null hypothesis.

You say a drug works when it doesn’t.

- Type II error (false negative): You fail to reject a false null hypothesis.

You say a drug doesn’t work when it actually does.

These trade-offs matter in high-stakes industries like healthcare and finance. A great follow-up is: “Which one is worse depends on the scenario.”

6. What’s the difference between supervised and unsupervised learning?

This is your bread and butter.

- Supervised learning = labelled data. You’re teaching the model using known input-output pairs.

Example: Spam vs. non-spam emails.

- Unsupervised learning = no labels. The model finds patterns by itself.

Example: Grouping customers by behaviour.

7. What is overfitting and underfitting?

- Overfitting: Your model is too complex. It performs very well on training data but poorly on new data.

- Underfitting: Your model is too simple. It misses key patterns, even in training data.

Analogy: Think of overfitting like memorising your textbook word for word. Underfitting is akin to reading only the chapter titles. Neither helps on exam day.

8. What is cross-validation, and why is it useful?

Cross-validation helps you evaluate a model’s performance reliably by splitting your data into parts. You train on some parts and test on the rest, rotating the roles.

K-fold cross-validation (usually with k=5 or 10) is common.

Interview gold: “It helps you detect overfitting and gives a more honest view of how the model might perform in real life.”
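To make the "rotating the roles" idea concrete, here is a minimal sketch of how k-fold splits can be generated. The function name `k_fold_indices` is hypothetical, and the sketch skips the shuffling step you would normally do first; in practice most people reach for a library implementation.

```python
def k_fold_indices(n_rows, k):
    """Yield (train_idx, test_idx) pairs; every row is tested exactly once."""
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_rows // k + (1 if i < n_rows % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_rows))
        yield train_idx, test_idx
        start += size

folds = list(k_fold_indices(n_rows=10, k=5))
for train_idx, test_idx in folds:
    print("train:", train_idx, "test:", test_idx)
```

You would fit the model on each `train_idx`, score it on the matching `test_idx`, and report the average of the k scores.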


9. What is the bias-variance tradeoff?

This concept defines why models succeed or fail.

- Bias = error from wrong assumptions (underfitting).

- Variance = error from model sensitivity (overfitting).

A high-bias model is rigid. A high-variance model is chaotic. Your goal? Find the sweet spot.

10. Difference between classification and regression?

- Classification predicts categories (e.g., email = spam or not).

- Regression predicts continuous values (e.g., price of a house).

Interviewers might ask you to switch between the two on the spot:

“If we convert house price ranges into categories like low, medium, high—what kind of model is that?”

Now you’re thinking like a data scientist.

11. Why use Pandas over Python lists?

Pandas gives you labelled, tabular data structures and vectorised operations that are usually much faster than looping over plain lists. Lists are… lists.

Pandas lets you slice, filter, group, and aggregate your data like a pro. You’ll rarely find real-world data science projects that don’t use it.

12. Write a Python function for the mean and standard deviation.

Be prepared to write simple code—even if it’s in a Google Meet chat box.

Also, be ready to explain why np.std() by default gives population standard deviation (use ddof=1 for sample).
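One possible answer, written in plain Python so it works in any chat box, is sketched below. The function names and the `ddof` parameter are chosen here to mirror the `np.std()` convention mentioned above; the sample data is made up.

```python
import math

def mean(values):
    """Arithmetic mean of a non-empty sequence of numbers."""
    if not values:
        raise ValueError("mean() needs at least one value")
    return sum(values) / len(values)

def std_dev(values, ddof=0):
    """Standard deviation; ddof=0 is population, ddof=1 is sample."""
    n = len(values)
    if n - ddof <= 0:
        raise ValueError("need more values than ddof")
    m = mean(values)
    squared_deviations = sum((x - m) ** 2 for x in values)
    return math.sqrt(squared_deviations / (n - ddof))

data = [2, 4, 4, 4, 5, 5, 7, 9]
print(mean(data))                       # 5.0
print(std_dev(data))                    # 2.0 (population)
print(round(std_dev(data, ddof=1), 3))  # 2.138 (sample)
```

Dividing by n - 1 instead of n corrects for the fact that the sample mean is itself estimated from the same data.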

13. SQL INNER JOIN vs LEFT JOIN?

SQL knowledge is non-negotiable—even for machine learning jobs.

- INNER JOIN: Only matching rows.

- LEFT JOIN: Keep all rows from the left table, match where possible.

Visuals help here. Draw two circles like a Venn diagram if you’re on a whiteboard.
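If there is no whiteboard, a tiny runnable example does the same job. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables and their rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'Asha'), (2, 'Ben'), (3, 'Chen');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 3, 15.0);
""")

inner_rows = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    INNER JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

left_rows = conn.execute("""
    SELECT c.name, o.amount
    FROM customers AS c
    LEFT JOIN orders AS o ON o.customer_id = c.id
    ORDER BY c.id, o.id
""").fetchall()

print(inner_rows)  # Ben is missing: he has no orders
print(left_rows)   # Ben appears once, with amount None (SQL NULL)
```

A common follow-up is "find customers with no orders", which is the LEFT JOIN plus a `WHERE o.id IS NULL` filter.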

14. How to handle missing values in a dataset?

It depends. And that’s what the interviewer wants to hear.

You might:

- Drop them (if they’re few).

- Impute with mean/median/mode.

- Predict them with models.

The key is to justify your choice based on why the values are missing: MCAR, MAR, or MNAR.
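As a small illustration of the imputation option, here is a sketch of median imputation that also keeps a "was missing" flag, so the model can still learn from the missingness itself. The function name and the `ages` column are hypothetical, and `None` stands in for a missing value.

```python
import statistics

def impute_median(values):
    """Replace None with the median of the observed values.

    Also returns a 0/1 flag per row marking which values were missing.
    """
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = statistics.median(observed)
    imputed = [fill if v is None else v for v in values]
    was_missing = [1 if v is None else 0 for v in values]
    return imputed, was_missing

ages = [34, None, 29, 41, None, 38]  # made-up column with two gaps
imputed, was_missing = impute_median(ages)
print(imputed)      # [34, 36.0, 29, 41, 36.0, 38]
print(was_missing)  # [0, 1, 0, 0, 1, 0]
```

The flag matters most when values are not missing at random, because simple imputation alone would hide that signal.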

15. What is data normalisation/standardisation?

Both techniques scale your features, which is especially important for distance-based algorithms like KNN and for regularised or gradient-based models like logistic regression.

- Normalisation: Rescales to [0, 1].

- Standardisation: Rescales to mean = 0, std = 1.
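The two formulas are short enough to write out by hand. This is a minimal standard-library sketch; the function names and the `incomes` values are invented for the example.

```python
import statistics

def min_max_normalise(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        raise ValueError("all values are identical; range is zero")
    return [(v - lo) / (hi - lo) for v in values]

def standardise(values):
    """Rescale values to mean 0 and (population) standard deviation 1."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    if sigma == 0:
        raise ValueError("all values are identical; standard deviation is zero")
    return [(v - mu) / sigma for v in values]

incomes = [20, 30, 40, 50, 60]  # hypothetical feature, in thousands
normalised = min_max_normalise(incomes)
z_scores = standardise(incomes)
print(normalised)                       # [0.0, 0.25, 0.5, 0.75, 1.0]
print([round(z, 2) for z in z_scores])  # [-1.41, -0.71, 0.0, 0.71, 1.41]
```

In a real pipeline, compute the min/max or mean/std on the training set only and reuse those numbers on the test set, otherwise information leaks across the split.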

15 Intermediate-Level Data Science Interview Questions

At this stage in your career, interviewers want to see how well you can connect technical knowledge with business value. You’re not just crunching numbers—you’re solving problems. Let’s dig deeper into each question.

16. How does Random Forest work, and why is it better than a single decision tree?

A single decision tree can be super sensitive to noisy data—it’s like listening to just one person in a crowd. What if they’re wrong?

Random Forest is like asking hundreds of people (trees) and taking the majority vote. It builds those trees using random subsets of data and features, which reduces overfitting.

17. Bagging vs Boosting – Which one and when?

It boils down to error type:

- Bagging: reduces variance → helps when the model is overfitting.

- Boosting: reduces bias → helps when the model is underfitting.

Boosting (such as XGBoost, LightGBM) is widely used in Kaggle competitions, but real-world boosting can be slow and may overfit small datasets. So think practically, not just theoretically.

18. How does logistic regression work?

People often say, “It’s for binary classification.” True. But go deeper.

It outputs probabilities by applying a sigmoid to a linear combination of inputs. You interpret coefficients as impact on log-odds, which makes it great for explainability.
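Here is what "a sigmoid over a linear combination" looks like in code. This is only the prediction step, with weights that are made up rather than fitted.

```python
import math

def sigmoid(z):
    """Squash any real number into the (0, 1) range."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for one row."""
    log_odds = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(log_odds)

# Hypothetical fitted model with two features.
weights = [0.8, -0.5]
bias = -1.0

p = predict_proba([2.0, 1.0], weights, bias)
print(round(p, 3))                     # 0.525

# exp(coefficient) is the odds ratio for a one-unit increase in that feature.
print(round(math.exp(weights[0]), 2))  # 2.23
```

Because the model is linear in the log-odds, `exp(weight)` is the factor by which the odds change per unit increase in that feature, holding the others fixed.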

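If someone asks, “Why not use a decision tree instead?”, you can say: “Trees are great, but deep trees and ensembles get hard to explain, while logistic regression coefficients give a direct, auditable effect on the log-odds. That matters in regulated industries like finance or healthcare.”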

19. What are the limitations of K-means clustering?

It’s fast and easy, but assumes:

- Clusters are spherical

- Equal size

- Same density

It also needs you to define k upfront.

Mention the elbow method and silhouette score to find the optimal k. For more complex data, consider alternatives such as DBSCAN or Hierarchical clustering.

20. What is regularization, and why does it matter?

Models can learn noise. Regularization tells them: “Chill. Simpler is better.”

- L1 (Lasso) → drops unimportant features (makes models sparse)

- L2 (Ridge) → shrinks coefficients smoothly

Use case: In high-dimensional datasets (like text or genomics), L1 can automatically reduce clutter and boost generalisation.
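A compact way to see why L1 produces zeros while L2 only shrinks is to look at a single coefficient in isolation. The sketch below assumes the simplified one-coefficient objective stated in each docstring (not a full regression solver), and the example coefficients are invented.

```python
def l1_shrink(z, lam):
    """Minimiser of 0.5 * (w - z)**2 + lam * abs(w): soft thresholding."""
    if z > lam:
        return z - lam
    if z < -lam:
        return z + lam
    return 0.0  # small coefficients are set exactly to zero

def l2_shrink(z, lam):
    """Minimiser of 0.5 * (w - z)**2 + 0.5 * lam * w**2: proportional shrinkage."""
    return z / (1 + lam)

unregularised = [3.0, -0.2, 0.05, -1.5]  # hypothetical unpenalised coefficients
lam = 0.5

l1 = [l1_shrink(z, lam) for z in unregularised]
l2 = [l2_shrink(z, lam) for z in unregularised]
print(l1)                         # [2.5, 0.0, 0.0, -1.0]
print([round(w, 3) for w in l2])  # [2.0, -0.133, 0.033, -1.0]
```

L1 removes the two weak coefficients entirely, while L2 keeps all four but pulls each one toward zero.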

21. Precision, Recall, F1: What’s the trade-off?

Don’t just define them—give a scenario.

Example: In fraud detection,

- Precision = fewer false positives (don’t block genuine users)

- Recall = catch as much fraud as possible (even if some users get annoyed)

F1 is the harmonic mean of precision and recall, so it helps when there’s a class imbalance and accuracy alone would mislead. Make sure you also mention the confusion matrix to visualise these.
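If you are asked to compute these by hand, the counts from the confusion matrix are all you need. A minimal sketch, with made-up labels where 1 means fraud:

```python
def classification_metrics(y_true, y_pred):
    """Return (precision, recall, f1) for the positive class labelled 1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical example: 4 real fraud cases, 3 transactions flagged.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]

precision, recall, f1 = classification_metrics(y_true, y_pred)
print(round(precision, 3), round(recall, 3), round(f1, 3))  # 0.667 0.5 0.571
```

Here two of the three flags were right (precision 2/3), but only two of the four fraud cases were caught (recall 1/2).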

22. ROC and AUC – How do you interpret them?

AUC gives a single number to judge classifier quality.

- 0.5 = random

- 0.7–0.8 = decent

- 0.9+ = great (but too good might mean data leakage!)
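A useful way to explain AUC is as a probability: pick one positive and one negative at random, and AUC is the chance the model scores the positive higher. The sketch below computes it directly from that definition. It is quadratic in the number of rows, so treat it as an explanation rather than production code; the labels and scores are invented.

```python
def auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(auc(y_true, scores))  # 0.75
```

Three of the four positive-negative pairs are ranked correctly, hence 0.75.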

23. Handling imbalanced datasets?

Beyond SMOTE, mention:

- Class weighting in models like LogisticRegression(class_weight='balanced')

- Anomaly detection techniques (Isolation Forest, One-Class SVM)

Real-world example? In telecom churn, maybe only 2% of customers churn, but those 2% are gold. Catching them is worth millions.
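It helps to know what class weighting actually does. The sketch below reproduces the heuristic scikit-learn documents for `class_weight='balanced'`, namely n_samples / (n_classes * class_count). The helper name and the 2% churn dataset are hypothetical.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

labels = [0] * 980 + [1] * 20  # made-up data: 2% churners
weights = balanced_class_weights(labels)
print(weights[1])            # 25.0
print(round(weights[0], 2))  # 0.51
```

Each misclassified churner then costs the model roughly 49 times more than a misclassified non-churner, which pushes the decision boundary toward better recall on the rare class.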

24. What is feature selection, and why does it boost performance?

Too many features = noise, overfitting, and longer training times.

Mention:

- Mutual information

- Chi-square

- Recursive Feature Elimination (RFE)

And always tie back to interpretability—especially in domains where transparency is key.

25. Hyperparameter tuning methods?

- Grid Search: exhaustive but slow.

- Random Search: faster and surprisingly effective.

- Bayesian Optimisation: smarter search based on past results.

Combine with cross-validation to avoid overfitting. Bonus points if you bring up Optuna or Ray Tune—modern tools loved in the industry.

26. How would you build a recommendation system?

Start with: What’s the goal? Netflix wants more watch time. Amazon wants more purchases. Spotify wants you to stick around. So your model needs to optimise for the metric that business actually cares about.

There are two core approaches:

- Collaborative filtering – based on user behaviour (e.g., “people who watched X also watched Y”).

- Content-based filtering – based on item attributes (e.g., genre, length, tags).

Now, the kicker is the cold start problem:

- What if a new user signs up? (no history)

- Or a new item is added? (no interactions)

Solutions:

- For new users: Ask for preferences during onboarding.

- For new items: Use content-based features (metadata) until interactions accumulate.

Don’t forget matrix factorisation (like SVD) or deep learning recsys models (like embeddings in neural networks) for larger platforms.
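To show you understand the mechanics, you could sketch item-based collaborative filtering on a toy ratings table. Everything below (users, titles, ratings, function names) is invented for illustration, and real systems work on sparse matrices rather than dictionaries.

```python
import math

# Hypothetical ratings: user -> {item: rating}
ratings = {
    "ana": {"Inception": 5, "Interstellar": 4, "Notting Hill": 1},
    "bo": {"Inception": 4, "Interstellar": 5},
    "cy": {"Notting Hill": 5, "Love Actually": 4},
    "di": {"Inception": 5, "Interstellar": 5, "Love Actually": 1},
}

def item_vector(item):
    """Ratings for one item, keyed by the users who rated it."""
    return {user: r[item] for user, r in ratings.items() if item in r}

def cosine(a, b):
    """Cosine similarity between two sparse rating vectors."""
    dot = sum(a[u] * b[u] for u in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def most_similar(item):
    """Item whose rating pattern is closest to the given item."""
    all_items = {i for r in ratings.values() for i in r}
    target = item_vector(item)
    scored = [(cosine(target, item_vector(other)), other)
              for other in all_items if other != item]
    return max(scored)[1]

print(most_similar("Inception"))  # Interstellar
```

This is the "people who watched X also watched Y" logic: the same users rated both films highly, so their rating vectors point in nearly the same direction.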

27. A company’s conversion rate dropped by 15%. How would you investigate?

This is where your data detective hat comes on. You’re not just a data scientist now—you’re Sherlock Holmes with a dashboard.

Step-by-step:

- Check data quality first. Is this drop even real? Are all events being logged properly?

- Define the funnel: homepage → product → cart → checkout. Where’s the biggest drop-off?

- Segment users: geography, device, time of day, source channel. Did something break on mobile? Is there a new bug in a region?

- Compare against past events: Was a new feature released? Price increase? Marketing campaign ended?

Tools to mention: funnel analysis in tools like Google Analytics, Mixpanel, or SQL queries on event logs.
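The funnel step above can be sketched in a few lines: compute the step-to-step conversion for two periods and see which step moved. The event counts are made up so that overall conversion falls by 15% (from 12% to 10.2%).

```python
funnel_steps = ["homepage", "product", "cart", "checkout"]

# Hypothetical user counts reaching each step.
last_month = {"homepage": 10000, "product": 6000, "cart": 2400, "checkout": 1200}
this_month = {"homepage": 10000, "product": 6000, "cart": 2040, "checkout": 1020}

def step_rates(counts):
    """Conversion rate from each funnel step to the next one."""
    return {
        f"{a} -> {b}": counts[b] / counts[a]
        for a, b in zip(funnel_steps, funnel_steps[1:])
    }

before = step_rates(last_month)
after = step_rates(this_month)
changes = {step: after[step] - before[step] for step in before}

worst_step = min(changes, key=changes.get)
print(worst_step, round(changes[worst_step], 2))  # product -> cart -0.06
```

In this toy data the whole drop sits between product page and cart, which tells you where to segment next (device, region, release date).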

28. How would you design an A/B test for a new website feature?

This one’s a classic, but many candidates miss the nuance.

Let’s say you want to test a new homepage design. Here’s your plan:

- Define your metric: Click-through rate? Signups? Time on site?

- Set a hypothesis: “New design will increase CTR by 10%.”

- Randomly split users: Ensure equal distribution across geography, devices, and other relevant factors.

- Calculate required sample size: Based on baseline conversion and desired lift. Use tools like Evan Miller’s A/B calculator.

- Monitor duration: Run long enough to account for weekday/weekend behaviour.

- Analyse the results statistically: Look for significance (p < 0.05), but also consider practical relevance.

Additionally, highlight potential pitfalls, including seasonality, early peeking at results, and p-hacking.
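For the analysis step, one common choice for conversion-style metrics is a two-proportion z-test. The sketch below is a minimal version using the standard library; the conversion counts are invented, and it assumes samples large enough for the normal approximation to hold.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: control converts 5.0%, variant converts 5.7%.
z, p_value = two_proportion_z_test(conv_a=500, n_a=10000, conv_b=570, n_b=10000)
print(round(z, 2), round(p_value, 3))
```

With these numbers the p-value comes out below 0.05, but the decision should still weigh whether a 0.7 percentage point lift is worth shipping.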

29. Explain how you would build a churn prediction model.

Start with: “What does churn mean for the business?”

In telecom, it’s a customer leaving. In SaaS, it’s a subscription cancellation. In mobile apps, it could be 30+ days of inactivity.

Here’s your workflow:

- Define churn clearly.

- Gather behavioural data: logins, session length, transactions, and customer service tickets.

- Create features: time since last activity, frequency, engagement patterns.

- Label the data (churned = 1, retained = 0).

- Model it using logistic regression, decision trees, or XGBoost.

- Evaluate with precision/recall, especially when churn is rare.

The most important part? Interpretation and action. Which features are driving churn? Can marketing intervene with targeted offers or retention campaigns?

30. Why does model interpretability matter in business settings?

Imagine your model says, “User A is going to churn.” Your stakeholder will ask, Why?

Interpretability builds trust. It lets stakeholders act confidently on model outputs. It also helps with:

- Debugging poor performance

- Regulatory compliance (finance, healthcare)

- Ethical AI and fairness audits

Tools to mention:

- SHAP: For feature-level explanations across models.

- LIME: Local, instance-level insights.

- Feature importance: Quick wins with tree models.

Don’t just talk tech—tie it to real-world outcomes. For instance: “In a bank, being able to explain why a customer was denied a loan isn’t just helpful—it’s required by law.”

10 Expert-Level Data Science Interview Questions

At this stage, your interview is less about syntax and more about systems, decisions, and business impact. The bar is higher: Can you think like an architect? A strategist? A leader?

Let’s break it down.

31. Explain how neural networks learn through backpropagation.

At its core, it’s about making better guesses through feedback. Here’s the flow:

- The input passes through layers to make a prediction.

- That prediction is compared to the actual label to calculate error (loss).

- Backpropagation calculates how much each weight contributed to that error using the chain rule of calculus.

- Gradients are computed and weights are updated using gradient descent.

Mention activation functions, learning rate, and the vanishing gradient problem, especially in deeper networks. You can also talk about solutions like ReLU and batch normalization.
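The four steps above fit into a very small example. The sketch below trains a deliberately tiny network (one input, one tanh hidden unit, one linear output) on a single made-up data point, with the chain rule written out by hand. The architecture, starting weights, and learning rate are all illustrative assumptions.

```python
import math

def forward(params, x):
    """Forward pass: x -> tanh hidden unit -> linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    y_hat = w2 * h + b2
    return h, y_hat

def loss_and_grads(params, x, y):
    """Squared-error loss and its gradient for each parameter."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    loss = 0.5 * (y_hat - y) ** 2
    d_y_hat = y_hat - y           # dL/dy_hat
    d_w2 = d_y_hat * h            # output layer weights
    d_b2 = d_y_hat
    d_h = d_y_hat * w2            # error flowing back into the hidden unit
    d_pre = d_h * (1 - h ** 2)    # tanh'(a) = 1 - tanh(a)^2
    d_w1 = d_pre * x              # hidden layer weights
    d_b1 = d_pre
    return loss, [d_w1, d_b1, d_w2, d_b2]

params = [0.5, 0.0, -0.3, 0.1]  # hypothetical starting weights
x, y = 1.5, 0.8                 # one made-up training example
learning_rate = 0.1

losses = []
for _ in range(200):
    loss, grads = loss_and_grads(params, x, y)
    losses.append(loss)
    # Gradient descent: step each weight against its gradient.
    params = [p - learning_rate * g for p, g in zip(params, grads)]

print(losses[0] > losses[-1])  # True
```

The `(1 - h ** 2)` factor is where vanishing gradients come from: when the hidden unit saturates, that term approaches zero and almost no error signal reaches the earlier weights.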

32. What are the differences between RNN, LSTM, and GRU?

These are all designed to handle sequential data, like time series, speech, or text.

- RNNs have short-term memory but suffer from the vanishing gradient problem.

- LSTMs introduce memory cells and gates to manage long-term dependencies.

- GRUs are a simplified version of LSTMs—faster, fewer parameters, often equally effective.

Mention practical applications:

- LSTM for language modelling or stock prediction.

- GRU for mobile apps where compute is constrained.

Leadership insight: Show that you understand when to choose what, not just how they work.

33. Explain the concept of transfer learning and its applications.

Instead of training a model from scratch, you take a pre-trained model (like ResNet, BERT) and fine-tune it for your specific task.

Why it matters:

- Saves time and compute.

- Great when labelled data is limited.

- Powerful for domains like medical imaging, sentiment analysis, or voice recognition.

- Real-world application

34. How do GANs (Generative Adversarial Networks) work?

Imagine two models:

- Generator tries to create fake data.

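- Discriminator tries to tell the real from the fake.

The two are trained against each other: as the discriminator gets better at spotting fakes, the generator is pushed to produce more realistic data.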

Mention applications:

- Image generation (e.g., DeepFakes)

- Art and design tools

- Data augmentation (e.g., synthetic medical images)

Challenges: mode collapse, training instability, and evaluation. You can impress by discussing techniques like Wasserstein GANs or progressive growing.

35. How would you design a real-time ML system for fraud detection?

This is where system design meets modeling.

Key components:

- Streaming ingestion – Kafka, Spark Streaming

- Real-time scoring – low-latency API endpoints with a fast model (e.g., logistic regression or decision trees)

- Feature store – for consistent features across training and serving

- Model retraining – handle concept drift with automated retraining pipelines

- Alerting system – threshold tuning, business escalation paths

36. How would you scale a machine learning model to handle millions of predictions per day?

Scalability means thinking beyond notebooks.

Approaches:

- Batch inference with Spark or distributed computing

- Online inference using model-serving frameworks (TensorFlow Serving, TorchServe)

- Use model compression, quantisation, and distillation for faster inference

- Deploy on Kubernetes or use serverless architecture like AWS Lambda

Add monitoring: latency, throughput, error rates, and model drift.

And don’t forget to design for failover and fallback models—because in production, things break.

37. How do you ensure reproducibility in machine learning experiments?

Imagine you built a great model today. Can someone else rebuild it exactly six months later?

Best practices:

- Use Git for code, DVC for data, and MLflow or Weights & Biases to log experiments.

- Containerise your environment with Docker.

- Fix random seeds and document hyperparameters.
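Seed fixing is the easiest of these to demonstrate. The sketch below shows a train/test split that is identical on every run because it uses its own seeded generator. The function name is hypothetical, and real projects also need to seed NumPy and any deep learning framework they use.

```python
import random

def train_test_split_indices(n_rows, test_fraction, seed):
    """Shuffle row indices reproducibly and split them into train and test."""
    rng = random.Random(seed)  # local generator, so no hidden global state
    indices = list(range(n_rows))
    rng.shuffle(indices)
    n_test = int(n_rows * test_fraction)
    return indices[n_test:], indices[:n_test]

run_1 = train_test_split_indices(100, test_fraction=0.2, seed=42)
run_2 = train_test_split_indices(100, test_fraction=0.2, seed=42)
other = train_test_split_indices(100, test_fraction=0.2, seed=7)

print(run_1 == run_2)  # True: same seed, same split
print(run_1 == other)  # a different seed almost certainly gives a different split
```

Logging the seed next to the hyperparameters is what lets someone else rebuild the same split six months later.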

Leadership insight: Emphasise the importance of reproducibility in collaboration, auditing, and regulated industries such as finance and healthcare.

38. How would you convince stakeholders to invest in a machine learning project?

Don’t pitch “cool tech.” Pitch outcomes.

Steps:

- Start with a clear business pain point or opportunity.

- Estimate ROI—projected savings, revenue increase, or customer retention.

- Share a mini-proof of concept or baseline numbers.

- Address risks related to data quality, model accuracy, and ethical concerns.

Be honest: “ML isn’t magic, but here’s how it can move the needle.”

39. A junior data scientist reports 95% model accuracy. What would you ask next?

Accuracy is often misleading, especially with imbalanced data.

Ask:

- What’s the baseline accuracy?

- What’s the class distribution?

- How was the data split? Was there any data leakage?

- What are the precision, recall, and F1-scores?

- Was the test data truly unseen?

This shows that you care about validation rigour, not vanity metrics.
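The baseline question is easy to make concrete. In the sketch below, a "model" that always predicts the majority class reaches 95% accuracy on made-up data with a 5% positive rate, while catching none of the positives.

```python
from collections import Counter

def majority_baseline_accuracy(labels):
    """Accuracy of a 'model' that always predicts the most common class."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)

def recall(y_true, y_pred, positive=1):
    """Share of actual positives that were predicted positive."""
    true_positives = sum(
        1 for t, p in zip(y_true, y_pred) if t == positive and p == positive
    )
    actual_positives = sum(1 for t in y_true if t == positive)
    return true_positives / actual_positives if actual_positives else 0.0

labels = [0] * 95 + [1] * 5          # hypothetical: 5% positive class
always_negative = [0] * len(labels)  # predicts the majority class every time

print(majority_baseline_accuracy(labels))  # 0.95
print(recall(labels, always_negative))     # 0.0
```

If the reported 95% only matches this baseline, the model has learned nothing useful yet.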

40. How do you stay updated with the latest developments in data science?

A great data scientist doesn’t stop learning.

You might say:

“I read arXiv papers weekly, follow top ML researchers on Twitter/X, and experiment with new tools via side projects. I also attend meetups and teach junior team members—it sharpens my thinking and keeps me accountable.”

Want to impress? Mention:

- Reproducing research papers

- Contributing to open source

- Writing LinkedIn/Medium articles to build thought leadership

It’s not about how much you read—it’s how you engage and apply what you learn.

Final Thoughts: How to Use These Questions to Win Interviews

Don’t just memorise answers. Practice explaining them as if you’re talking to a curious friend: clear, concise, and confident. Because that’s the secret: Data science is about solving problems for real people, not showing off equations.

So go in, stay calm, and tell the story behind your answers.