Introduction

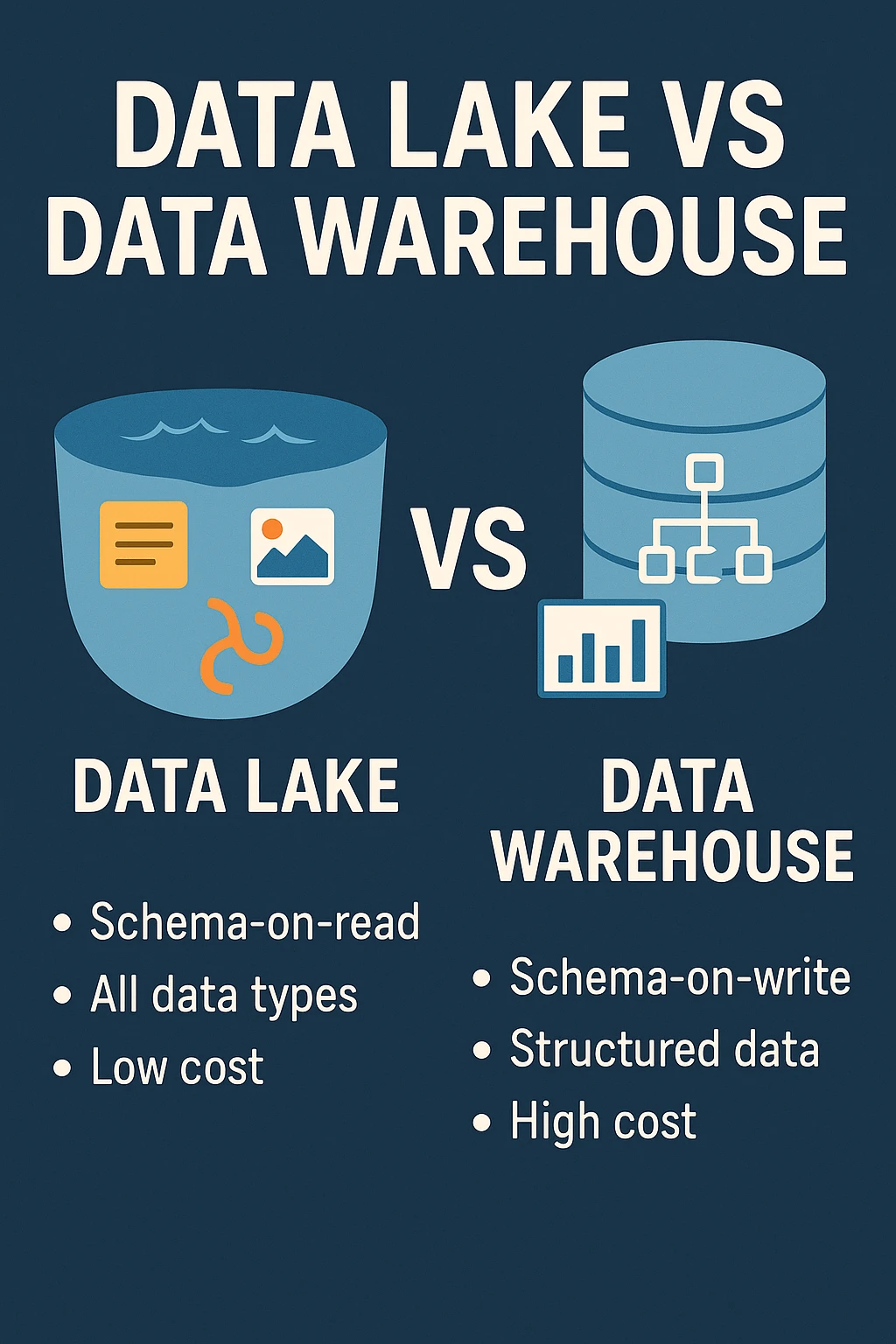

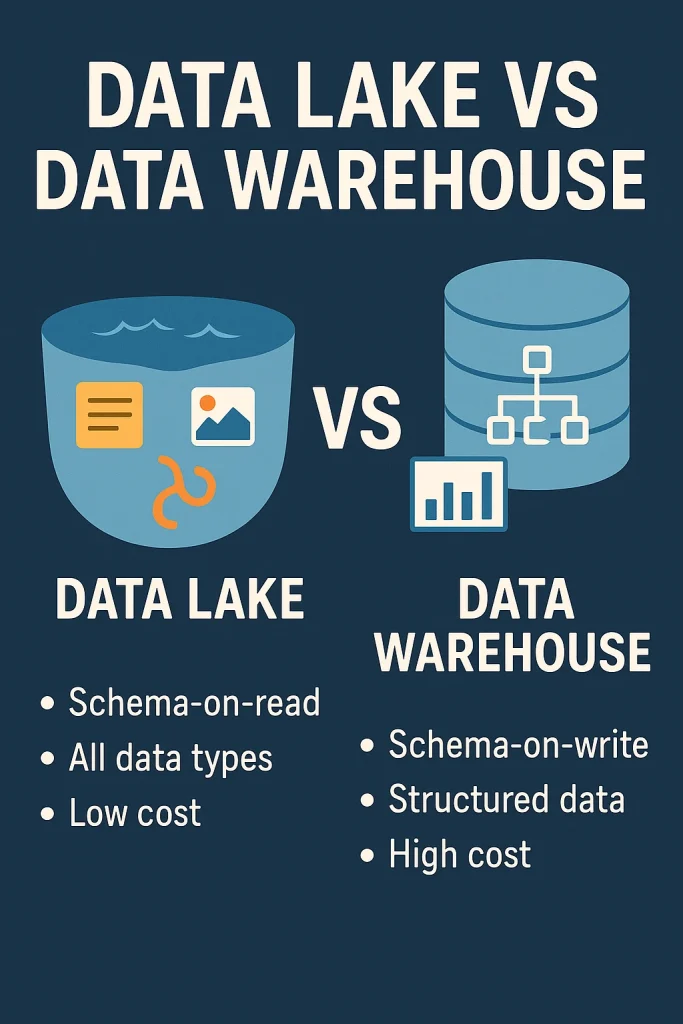

The choice between a data lake and a data warehouse has become a central discussion in today’s data-driven world. The digital revolution has reshaped every industry, from edtech and finance to tourism, by generating massive amounts of data from sources such as tweets, online purchases, and sensor readings. In this landscape, data is no longer just a byproduct; it is the core of modern business. To stay competitive, companies must master the art of collecting, storing, and analysing data. This shift has brought two critical data storage approaches to the forefront: Data Lakes and Data Warehouses. Both are integral to modern big data infrastructure.

What Do We Mean by Data Lake?

A Data Lake is a centralised, scalable repository built to store massive volumes of data in its original, unprocessed format. It can hold all types of data (structured, semi-structured, and unstructured), including videos, images, audio, XML files, and more.

Unlike data warehouses, which require data to conform to a predefined schema before ingestion (schema-on-write), data lakes use a schema-on-read approach. Data is stored as-is, and a structure is applied only when it is read for analysis. This is especially useful in environments where data is diverse, evolving, or not yet fully understood.
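To make the schema-on-read versus schema-on-write distinction concrete, here is a minimal Python sketch. It assumes raw events arrive as JSON strings; the sample records and the names `read_with_schema`, `write_with_schema`, and `PURCHASE_SCHEMA` are hypothetical, and plain Python lists stand in for real lake and warehouse storage.

```python
import json

# Hypothetical raw events, kept exactly as they arrived (the "lake").
RAW_EVENTS = [
    '{"user": "u1", "action": "click", "ts": "2024-05-01T10:00:00"}',
    '{"user": "u2", "action": "purchase", "amount": "19.99"}',
    '{"sensor": "s7", "temp_c": 21.5}',
]


def read_with_schema(raw_lines, fields):
    """Schema-on-read: parse each raw JSON line and project only the fields
    the current analysis needs; absent fields become None."""
    for line in raw_lines:
        record = json.loads(line)
        yield {f: record.get(f) for f in fields}


# Schema-on-write: every record must match this (field -> type) mapping
# before it is stored (the "warehouse").
PURCHASE_SCHEMA = {"user": str, "action": str, "amount": float}


def write_with_schema(record, schema, table):
    """Validate and type-coerce a record, then append it. A missing field
    raises ValueError; so does a failed conversion such as float('n/a')."""
    missing = [f for f in schema if f not in record]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    table.append({f: typ(record[f]) for f, typ in schema.items()})


if __name__ == "__main__":
    # The same raw data can be read later with whatever fields are needed.
    print(list(read_with_schema(RAW_EVENTS, ["user", "action"])))

    warehouse_table = []
    for line in RAW_EVENTS:
        try:
            write_with_schema(json.loads(line), PURCHASE_SCHEMA, warehouse_table)
        except ValueError as err:
            print("rejected:", err)
    print(warehouse_table)  # only the purchase record is stored
```

The lake-style reader keeps all three raw records and lets each analysis pick its fields at read time, while the warehouse-style writer accepts only the record that fits its schema up front.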

This concept emerged in response to the Big Data era, when traditional systems struggled to scale cost-effectively. However, challenges such as governance, data quality, and discoverability remain. Without proper management, a ‘data lake’ can quickly devolve into a ‘data swamp’.
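Much of that governance comes down to metadata. The sketch below is a hypothetical in-memory catalog, not any real product's API; it shows the kind of information (owner, description, tags, upstream lineage) that keeps datasets in a lake discoverable. `DatasetEntry` and `Catalog` are illustrative names.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    """Hypothetical catalog record describing one dataset in the lake."""
    path: str
    owner: str
    description: str
    tags: set = field(default_factory=set)
    upstream: list = field(default_factory=list)  # paths this dataset is derived from


class Catalog:
    """Minimal in-memory catalog; registering requires basic metadata."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        # Refuse undocumented datasets, a common path to a "data swamp".
        if not entry.owner or not entry.description:
            raise ValueError(f"{entry.path}: owner and description are required")
        self._entries[entry.path] = entry

    def search(self, tag):
        """Discoverability: return the sorted paths of datasets carrying a tag."""
        return sorted(p for p, e in self._entries.items() if tag in e.tags)

    def lineage(self, path):
        """Return every upstream dataset of `path`, walking the graph depth-first."""
        seen, stack = [], list(self._entries[path].upstream)
        while stack:
            parent = stack.pop()
            if parent not in seen:
                seen.append(parent)
                if parent in self._entries:
                    stack.extend(self._entries[parent].upstream)
        return seen


if __name__ == "__main__":
    catalog = Catalog()
    catalog.register(DatasetEntry("raw/clickstream", "web-team", "Raw click events", {"raw", "web"}))
    catalog.register(DatasetEntry("curated/sessions", "analytics", "Sessionised clicks",
                                  {"curated", "web"}, ["raw/clickstream"]))
    print(catalog.search("web"))                # ['curated/sessions', 'raw/clickstream']
    print(catalog.lineage("curated/sessions"))  # ['raw/clickstream']
```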

Key Features

- Schema-on-Read Architecture: Ingests raw data without a predefined structure.

- Supports All Data Types: Structured (tables), semi-structured (JSON, XML), and unstructured (text, images, audio).

- Batch and Real-Time Processing: Supports both batch and real-time analysis for use cases such as anomaly detection, recommendation engines, and IoT monitoring.

- Scalability: Grows easily using cloud-based platforms like Amazon S3 and Azure Data Lake Storage.

Common Technologies

- Hadoop Distributed File System (HDFS): Foundational storage layer in the Apache Hadoop ecosystem.

- Amazon S3: Scalable object storage widely used with analytic tools like Redshift and Athena.

- Azure Data Lake Storage: Secure and scalable storage supporting frameworks like Hadoop and Spark.

- Delta Lake: Open-source storage layer, originally developed by Databricks, that adds ACID transactions, data versioning, and schema enforcement.
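Delta Lake's versioning rests on a transaction log that records, roughly, which data files each commit adds or removes. The toy class below illustrates that idea in plain Python. It is a simplified sketch, not Delta Lake's on-disk format or API, and `VersionedTable` and the file names are hypothetical.

```python
class VersionedTable:
    """Toy table whose writes are commits in an append-only log.

    Simplified illustration of the transaction-log idea only; this is NOT
    Delta Lake's on-disk format or API.
    """

    def __init__(self):
        self._log = []  # one entry per commit: a list of ("add" | "remove", file)

    def commit(self, adds=(), removes=()):
        """Append one commit as a single log entry and return its version number."""
        actions = [("add", f) for f in adds] + [("remove", f) for f in removes]
        self._log.append(actions)  # one entry per commit, so replay sees all of it or none
        return len(self._log) - 1

    def files_at(self, version=None):
        """Replay the log up to `version` (default: latest) to list live files."""
        if version is None:
            version = len(self._log) - 1
        live = set()
        for actions in self._log[: version + 1]:
            for op, name in actions:
                if op == "add":
                    live.add(name)
                else:
                    live.discard(name)
        return sorted(live)


if __name__ == "__main__":
    table = VersionedTable()
    v0 = table.commit(adds=["part-0.parquet", "part-1.parquet"])
    # e.g. an update that rewrites part-0 into part-2
    table.commit(adds=["part-2.parquet"], removes=["part-0.parquet"])
    print(table.files_at())    # ['part-1.parquet', 'part-2.parquet']
    print(table.files_at(v0))  # ['part-0.parquet', 'part-1.parquet'] ("time travel")
```

Because a commit only marks files as removed instead of deleting them, an earlier version can be reconstructed by replaying a shorter prefix of the log.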

Best Use Cases

- Big Data analytics

- Data science and machine learning

- Storing raw, high-volume data from diverse sources

Example

A retail company might use a data lake to ingest clickstream data, social media feeds, product images, and sales logs — enabling advanced analytics and AI-driven recommendations.
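One common way to organise such raw data is a Hive-style partitioned directory layout (`key=value` folder names), which lets query engines read only the partitions they need. The sketch below assumes a local filesystem as a stand-in for object storage such as Amazon S3; the `source=`/`dt=` keys, batch IDs, and function names are hypothetical.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def raw_path(root, source, day, batch_id):
    """Hive-style partition path: root/source=<source>/dt=<YYYY-MM-DD>/<batch_id>.jsonl"""
    return root / f"source={source}" / f"dt={day.isoformat()}" / f"{batch_id}.jsonl"


def land_raw(root, source, day, batch_id, records):
    """Write records unchanged as JSON Lines into the raw zone (no schema applied)."""
    path = raw_path(root, source, day, batch_id)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w", encoding="utf-8") as fh:
        for rec in records:
            fh.write(json.dumps(rec) + "\n")
    return path


def list_partition(root, source, day):
    """List one partition's files instead of scanning the whole lake."""
    return sorted((root / f"source={source}" / f"dt={day.isoformat()}").glob("*.jsonl"))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        day = date(2024, 5, 1)
        land_raw(root, "clickstream", day, "batch-001", [{"user": "u1", "page": "/home"}])
        land_raw(root, "sales", day, "batch-001", [{"sku": "A1", "qty": 2}])
        for p in list_partition(root, "clickstream", day):
            print(p.relative_to(root).as_posix())
        # source=clickstream/dt=2024-05-01/batch-001.jsonl
```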

What is a Data Warehouse?

A Data Warehouse is a centralised, highly organised data management system designed to efficiently store, retrieve, and analyse large amounts of historical data. Unlike data lakes, data warehouses require data to be cleaned and formatted before ingestion.

This structure supports analytical workloads such as complex queries, dashboards, and trend analysis. Its structured nature also underpins compliance, governance, and consistent reporting.

Key Features

- Schema-on-Write: Data must be processed, validated, and transformed before storage.

- Optimised for Analytics: Handles complex SQL queries and large-scale aggregations.

- Data Integrity and Consistency: Maintains accuracy through strict schema and validation rules.

- High Performance: Uses indexing, materialised views, and data partitioning for fast querying.

Popular Tools

- Amazon Redshift

- Google BigQuery

- Snowflake

- Azure Synapse Analytics

Best Use Cases

- Business intelligence dashboards

- Financial and operational reporting

- Customer segmentation

- Regulatory compliance

Example

A bank might use a data warehouse to consolidate transactional data from multiple branches, enabling robust financial reporting and compliance audits.
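As a rough illustration of that consolidation, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse such as Redshift or BigQuery. The star-style schema (one branch dimension, one transaction fact table), its column names, and the sample rows are hypothetical; the `NOT NULL`, `CHECK`, and foreign-key constraints show schema-on-write validation at load time.

```python
import sqlite3

# Hypothetical star-style schema; sqlite3 stands in for a real warehouse.
SCHEMA = """
CREATE TABLE dim_branch (
    branch_id   INTEGER PRIMARY KEY,
    branch_name TEXT NOT NULL,
    region      TEXT NOT NULL
);
CREATE TABLE fact_transaction (
    txn_id    INTEGER PRIMARY KEY,
    branch_id INTEGER NOT NULL REFERENCES dim_branch(branch_id),
    txn_date  TEXT NOT NULL,                 -- ISO date, e.g. '2024-05-01'
    amount    REAL NOT NULL CHECK (amount <> 0)
);
"""

# Monthly net amount per region, a typical reporting query.
REPORT = """
SELECT b.region,
       substr(t.txn_date, 1, 7) AS month,
       COUNT(*)                 AS n_txns,
       ROUND(SUM(t.amount), 2)  AS net_amount
FROM fact_transaction AS t
JOIN dim_branch AS b ON b.branch_id = t.branch_id
GROUP BY b.region, month
ORDER BY b.region, month
"""


def build_warehouse(branches, branch_feeds):
    """Create the schema, load branches, then consolidate each branch's feed."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when enabled
    conn.executescript(SCHEMA)
    conn.executemany("INSERT INTO dim_branch VALUES (?, ?, ?)", branches)
    for feed in branch_feeds:  # one list of (branch_id, date, amount) per branch system
        conn.executemany(
            "INSERT INTO fact_transaction (branch_id, txn_date, amount) VALUES (?, ?, ?)",
            feed,
        )
    conn.commit()
    return conn


if __name__ == "__main__":
    branches = [(1, "Downtown", "North"), (2, "Harbour", "South")]
    feeds = [
        [(1, "2024-05-01", 250.0), (1, "2024-05-03", -40.0)],
        [(2, "2024-05-02", 1200.0), (2, "2024-06-01", 75.5)],
    ]
    conn = build_warehouse(branches, feeds)
    for row in conn.execute(REPORT):
        print(row)
    # ('North', '2024-05', 2, 210.0)
    # ('South', '2024-05', 1, 1200.0)
    # ('South', '2024-06', 1, 75.5)
```

A row that references an unknown branch, or has a zero amount, raises `sqlite3.IntegrityError` instead of silently entering the reporting tables.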

Data Lake vs Data Warehouse: Core Differences

| Feature | Data Lake | Data Warehouse |
|---|---|---|
| Data Types | Structured, semi-structured, unstructured | Structured |
| Schema | Schema-on-read | Schema-on-write |
| Storage Cost | Low (cloud/commodity storage) | Higher (optimised and proprietary) |
| Performance | Slower for SQL; fast raw data ingest | Fast for SQL queries |
| Data Processing | Batch & real-time | Primarily batch |
| Data Quality | Variable | High (enforced) |
| Use Cases | Data science, ML, exploration | BI, reporting, compliance |
| Technologies | Hadoop, S3, ADLS, Delta Lake | Redshift, BigQuery, Snowflake |

While data lakes offer flexibility and scale, and data warehouses provide structure and performance, a new hybrid approach is emerging. Solutions like Databricks Lakehouse and Snowflake now merge both models, providing the scalability of lakes with the performance of warehouses.

When to Use a Data Lake

Data lakes shine when you need to store and analyse large volumes of raw, unstructured, or semi-structured data. Ideal for:

- IoT Sensor Data

- Clickstream Logs

- Audio, Video, and Image Storage

- Machine Learning and Deep Learning

Advantages

- Scalable to petabytes

- Cost-effective for raw data

- Flexible for experimentation

Limitations

- Lower data quality if not governed

- Not optimised for complex SQL queries

When to Use a Data Warehouse

Data warehouses suit structured data environments requiring accuracy, consistency, and high performance. Ideal for:

- Financial and operational dashboards

- HR analytics

- Customer segmentation

Advantages

- High query performance

- Strong data integrity

- Reliable, standardised reporting

Limitations

- Higher cost

- Less flexibility for unstructured data

Can You Use Both Together? Introducing the Lakehouse

What is a Data Lakehouse?

A Data Lakehouse blends the flexibility of data lakes with the performance and reliability of data warehouses. It stores all data types in a unified platform, supporting both BI and ML workloads.

Key Benefits

- Unified storage

- Schema enforcement

- Real-time analytics

- Reduced data duplication
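The sketch below illustrates several of these benefits at toy scale: one copy of the data, a declared schema enforced on every append, and both a BI-style aggregate and an ML-style feature extraction reading the same rows. `LakehouseTable` and its methods are hypothetical; real lakehouse platforms implement this with open table formats over files in object storage, not Python lists.

```python
from collections import defaultdict


class LakehouseTable:
    """Toy single-copy table: a declared schema is enforced on every append,
    and both BI-style and ML-style reads use the same rows.
    Hypothetical sketch; real platforms store open-format files, not lists."""

    def __init__(self, schema):
        self.schema = dict(schema)  # column name -> expected Python type
        self.rows = []

    def append(self, rows):
        """Validate the whole batch first, so a bad row leaves the table unchanged."""
        rows = list(rows)
        for row in rows:
            if set(row) != set(self.schema):
                raise ValueError(f"columns {sorted(row)} do not match {sorted(self.schema)}")
            for col, typ in self.schema.items():
                if not isinstance(row[col], typ):
                    raise TypeError(f"column {col!r} expects {typ.__name__}")
        self.rows.extend(dict(r) for r in rows)

    def revenue_by_country(self):
        """BI-style read: total amount per country."""
        totals = defaultdict(float)
        for r in self.rows:
            totals[r["country"]] += r["amount"]
        return dict(sorted(totals.items()))

    def features(self, columns):
        """ML-style read: project the same rows into feature vectors."""
        return [[r[c] for c in columns] for r in self.rows]


if __name__ == "__main__":
    sales = LakehouseTable({"customer": str, "country": str, "amount": float, "items": int})
    sales.append([
        {"customer": "c1", "country": "DE", "amount": 30.0, "items": 2},
        {"customer": "c2", "country": "FR", "amount": 12.5, "items": 1},
        {"customer": "c3", "country": "DE", "amount": 7.5, "items": 1},
    ])
    print(sales.revenue_by_country())           # {'DE': 37.5, 'FR': 12.5}
    print(sales.features(["amount", "items"]))  # [[30.0, 2], [12.5, 1], [7.5, 1]]
```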

Technologies

- Databricks Lakehouse Platform

- Delta Lake

- Snowflake (hybrid features)

Ideal For

Organisations that need both experimentation (ML, data science) and reliable reporting (dashboards, compliance).

Real-World Examples

- Netflix: Uses a data lake for real-time behaviour analysis and an open table format (Apache Iceberg, which Netflix originally created) for unified analytics.

- Airbnb: Combines data lakes for exploration with warehouses for reporting.

- Banks: Use data lakes for fraud detection and warehouses for compliance and audits.

Security and Governance Comparison

| Feature | Data Lake | Data Warehouse | Lakehouse |
|---|---|---|---|
| Security | Evolving (cloud IAM, encryption) | Mature (granular controls, auditing) | Unified |
| Governance | Challenging without metadata | Strong (metadata, lineage) | Unified policies and tooling |

Cost and Scalability

| Feature | Data Lake | Data Warehouse | Lakehouse |
|---|---|---|---|
| Storage Cost | Low | High | Moderate |
| Compute Cost | Variable (pay-as-you-go) | Predictable | Efficient (on-demand) |
| Scalability | Virtually unlimited | Scalable | Highly scalable |

Summary Table for Quick Comparison

| Aspect | Data Lake | Data Warehouse | Lakehouse |
|---|---|---|---|
| Data Types | All | Structured | All |
| Schema | On-read | On-write | Both |
| Performance | Variable | High | High |
| Cost | Low | High | Moderate |
| Use Cases | ML, exploration | BI, reporting | Both |
| Security | Evolving | Mature | Unified |
| Governance | Challenging | Strong | Unified |

Conclusion

Data lakes and data warehouses serve distinct, vital roles in modern data architecture. Data lakes offer great flexibility and scalability for raw, varied data, fuelling innovation in data science and AI. Data warehouses deliver the performance, reliability, and governance needed for business intelligence and operational reporting.

The Data Lakehouse model is rapidly gaining traction. It provides a unified platform that supports both experimentation and enterprise-grade analytics. As organisations face ever-growing data volumes and complexity, the lakehouse approach can offer a scalable and cost-effective solution.

Choosing the right architecture depends on:

- The types and sources of your data

- Your analytics and reporting needs

- Your team’s expertise and governance requirements

- Your budget and scalability goals

For many modern organisations, a hybrid lakehouse model is emerging as the new standard, combining the strengths of both approaches in a future-proof data strategy.