Introduction to Big Data

Big Data has revolutionised businesses by making data-driven insights possible at a scale that was previously unimaginable. The key to understanding big data is that the data has to be managed efficiently. Since today's digital businesses rely heavily on data-driven decisions, big data provides a powerful infrastructure for making them, which makes it a crucial element of today's digital economy.

What is Big Data?

Big Data refers to massive volumes of structured and unstructured data generated from human-generated content, machine-generated data, social media, IoT devices, public datasets, and other sources. Storing and processing data at this scale requires technologies such as Hadoop, Spark, and Cassandra.

Understanding the 5 Vs of Big Data

Big data can be characterised by five Vs: volume, velocity, variety, veracity, and value. Take the healthcare industry as an example. It generates massive volumes of data in the form of patient test results and records (volume). New data is generated rapidly, every day (velocity). The data comes in structured, semi-structured, and unstructured forms (variety). Veracity refers to the credibility and accuracy of the data. Finally, working with this data leads to data-driven decision-making and better risk assessment, which brings value to the medical sector.

Why is Big Data Important in Today’s World?

Big Data optimises operations, enriches customer experiences, and improves decision-making. Big data analytics enables predictive modelling, fraud detection, and personalised marketing in healthcare, finance, and retail. It can also be used in urban planning and policy-making.

What is Hadoop?

The crucial question is how to overcome the challenge of storing and processing such massive data. Technologies such as Hadoop, Spark, and Cassandra exist for this purpose; let us take Hadoop as an example. Hadoop is an open-source framework from the Apache Software Foundation that efficiently stores and processes massive data. Data in Hadoop is stored in the Hadoop Distributed File System (HDFS), and the combination of distributed storage and parallel processing makes Hadoop a preferred choice for handling big data challenges. Hadoop scales horizontally, growing its capacity as more nodes are added. It is fault tolerant because it replicates data across nodes to avoid data loss. It also runs on inexpensive commodity hardware, which makes it cost-effective, and because it is written in Java it can run on several operating systems, although Linux is its primary platform.
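To make the trade-off between scaling out and replication concrete, here is a rough back-of-the-envelope sketch in Python. It assumes every node contributes the same disk space (4 TB is an illustrative figure, not a recommendation) and uses HDFS's default replication factor of 3; it ignores reserved space and temporary files, so real usable capacity will be lower.

```python
def usable_capacity_tb(nodes: int, disk_per_node_tb: float, replication: int = 3) -> float:
    """Rough usable HDFS capacity in TB: total raw disk divided by the replication factor.

    Assumption: ignores disk space reserved for the OS and for non-HDFS use.
    """
    if nodes <= 0 or replication <= 0:
        raise ValueError("nodes and replication must be positive")
    raw_tb = nodes * disk_per_node_tb
    return raw_tb / replication


# Scaling out: every node added contributes the same amount of capacity.
for n in (3, 6, 12):
    print(f"{n} nodes -> {usable_capacity_tb(n, 4.0):.1f} TB usable")
```

Doubling the node count doubles the usable capacity (4.0, 8.0, and 16.0 TB here), which is what horizontal scaling means in practice. Raising the replication factor buys more fault tolerance at the cost of capacity.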


Key Components of Hadoop

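The Hadoop Distributed File System (HDFS) is the backbone of the Hadoop framework, serving as its primary storage system for managing and storing large datasets on commodity hardware. MapReduce handles parallel processing by breaking a job into smaller tasks. This speeds up processing: instead of one machine doing all the work, the tasks are spread across several machines (for example, three) that run at the same time. In classic MapReduce (Hadoop 1.x), execution was coordinated by two daemons: the JobTracker, which scheduled and monitored jobs, and the TaskTrackers, which ran the individual tasks on each node. From Hadoop 2 onwards, YARN took over these responsibilities.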
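The map, shuffle, and reduce steps can be illustrated with the classic word-count example. The Python sketch below is a single-process simulation, not Hadoop's Java API: each string in `splits` stands in for a block that a different machine would process, and the function names are illustrative.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def map_phase(split: str) -> List[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group all values emitted for the same key, sorted by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key (here, by summing them)."""
    return key, sum(values)


def word_count(splits: List[str]) -> Dict[str, int]:
    # In Hadoop each split would be mapped on a different node in parallel;
    # here the three "machines" are simulated one after another.
    mapped = [pair for split in splits for pair in map_phase(split)]
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


splits = ["big data needs big storage", "hadoop stores big data", "spark and hadoop"]
print(word_count(splits))
```

In a real cluster, the mappers for the three splits would run on different nodes at the same time, and the framework would perform the shuffle over the network before the reducers run.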

YARN (Yet Another Resource Negotiator) allocates the cluster resources, such as memory and CPU, that applications need to process data. It also performs job scheduling (deciding which job runs on which machine) and supports a variety of processing engines that work on data stored in HDFS. It allocates resources dynamically, which makes efficient use of the cluster.

The next component of Hadoop is Hadoop Common, the standard utilities. These are Java libraries and files that support all the other components in a Hadoop cluster. Because these shared libraries abstract away the underlying hardware and operating system, it is convenient for different machines to join a Hadoop cluster.
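The idea of dynamic resource allocation can be sketched as follows. This is a deliberately simplified, hypothetical scheduler in Python (the `Node`, `ContainerRequest`, and `allocate` names are made up for illustration); YARN's real Capacity and Fair schedulers also handle queues, priorities, and data locality, which this sketch ignores. It places each container request on the node with the most free memory that can still fit it.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    free_memory_mb: int
    free_vcores: int


@dataclass
class ContainerRequest:
    app: str
    memory_mb: int
    vcores: int


def allocate(nodes: List[Node], requests: List[ContainerRequest]) -> Dict[int, Optional[str]]:
    """Assign each request to the node with the most free memory that can fit it.

    Returns request index -> node name, or None when no node has room
    (a real scheduler would keep the request queued instead).
    """
    placement: Dict[int, Optional[str]] = {}
    for i, req in enumerate(requests):
        candidates = [n for n in nodes
                      if n.free_memory_mb >= req.memory_mb and n.free_vcores >= req.vcores]
        if not candidates:
            placement[i] = None
            continue
        best = max(candidates, key=lambda n: n.free_memory_mb)  # first node wins ties
        best.free_memory_mb -= req.memory_mb  # reserve resources for this container
        best.free_vcores -= req.vcores
        placement[i] = best.name
    return placement


nodes = [Node("node1", 8192, 4), Node("node2", 4096, 4)]
requests = [ContainerRequest("etl", 4096, 2),
            ContainerRequest("etl", 4096, 2),
            ContainerRequest("report", 2048, 1),
            ContainerRequest("report", 4096, 1)]
print(allocate(nodes, requests))
```

Here the first two requests land on node1, the third on node2, and the fourth finds no room, so in real YARN it would wait until resources are released.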

Hadoop Ecosystem: Tools & Technologies

The Hadoop ecosystem comprises four main layers:

- Data Storage Layer

- Data Processing Layer

- Data Access Layer

- Data Management Layer

The Data Storage Layer comprises:

- HDFS is based on a master/slave architecture (a NameNode manages the metadata and DataNodes store the data) and stores, distributes, and provides access to large-scale data across Hadoop clusters.

- HBase is a NoSQL database built on top of HDFS for fast record lookups in large tables, modelled on Google's Bigtable.

The Data Processing Layer contains MapReduce and YARN:

- MapReduce is a parallel data processing framework that runs computations across the cluster, which saves time for anyone analysing large datasets.

- YARN is the cluster's resource manager. It serves multiple client applications, allocates resources among them, and enforces security controls.

The third layer is the Data Access Layer, with the following components:

- Hive integrates SQL-based querying with Hadoop through HiveQL, an SQL-like language. It reads and writes large datasets and is mainly used for batch processing, although recent versions also support interactive queries.

- Pig is a high-level data flow platform (its scripting language is Pig Latin) for analysing large datasets. It provides a higher level of abstraction than writing MapReduce code directly.

- Mahout is an open-source library for writing scalable machine learning algorithms.

- Avro is a language-independent, schema-based data serialisation system.

- Sqoop (SQL-to-Hadoop) is a command-line tool that transfers data between relational databases (RDBMS) and Hadoop.

The fourth layer is the Data Management Layer, with the following components:

- Oozie is a server-based workflow scheduler that schedules Hadoop jobs and chains them together into pipelines.

- Chukwa is a log collection framework built on top of HDFS and MapReduce. It collects logs for monitoring and includes tools for monitoring, analysing, and presenting the results.

- Flume is used to collect and ingest large volumes of streaming data, such as logs from multiple web servers, into Hadoop.

- ZooKeeper is a coordination service for Hadoop. It provides configuration management, naming, and synchronisation so that the distributed components of a cluster act as one system for data distribution, management, and transfer.

Setting Up Hadoop

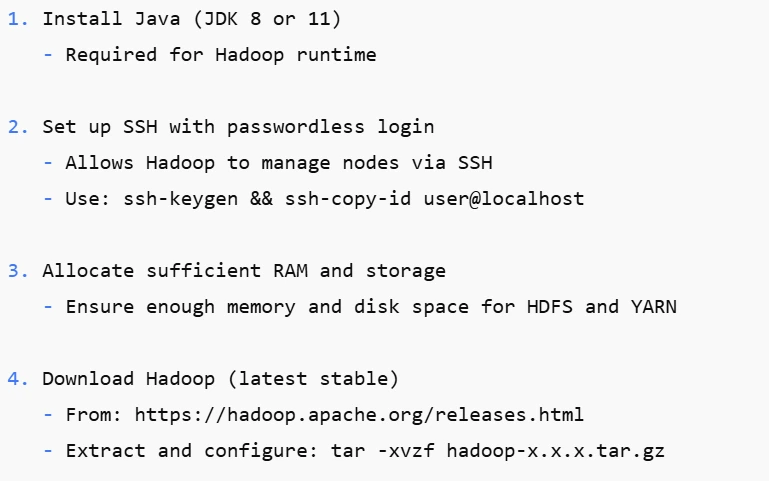

Prerequisites for Installing Hadoop
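Hadoop runs on the JVM, so a Java installation is required, and the bundled start scripts use SSH to launch the daemons. The small Python helper below is a convenience sketch (not part of Hadoop) that checks whether `java` and `ssh` are on the `PATH` before you begin; the exact Java version you need depends on your Hadoop release.

```python
import shutil
from typing import Callable, List, Optional

# Tools a single-node Hadoop setup relies on: a Java runtime, and SSH
# (used by start-dfs.sh / start-yarn.sh to launch the daemons).
REQUIRED_TOOLS = ["java", "ssh"]


def missing_tools(which: Callable[[str], Optional[str]] = shutil.which) -> List[str]:
    """Return the required command-line tools that cannot be found on PATH."""
    return [tool for tool in REQUIRED_TOOLS if which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Install these before Hadoop:", ", ".join(missing))
    else:
        print("java and ssh found on PATH")
```

The `which` parameter defaults to `shutil.which` but can be swapped out, which keeps the check easy to test.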

Step-by-Step Hadoop Installation Guide

To install Hadoop, use a Unix-based environment (Linux or macOS), as Hadoop is primarily designed to run on Linux distributions such as Ubuntu, CentOS, or Red Hat.

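Step 1: Extract the Hadoop archive and change into the extracted directory using the following bash commands (x.y.z stands for the Hadoop version you downloaded):

tar -xvzf hadoop-x.y.z.tar.gz -C /usr/admin/

cd /usr/admin/hadoop-x.y.z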

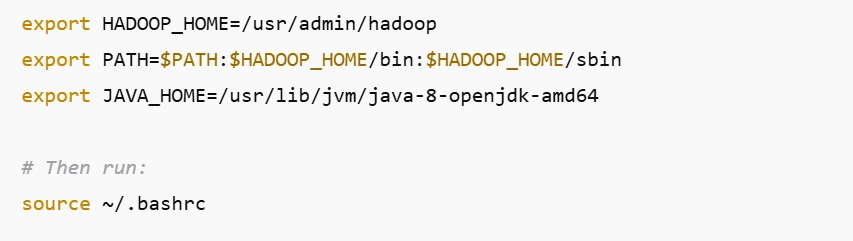

Step 2: Configure the environment variables in .bashrc or .profile using the following bash commands:

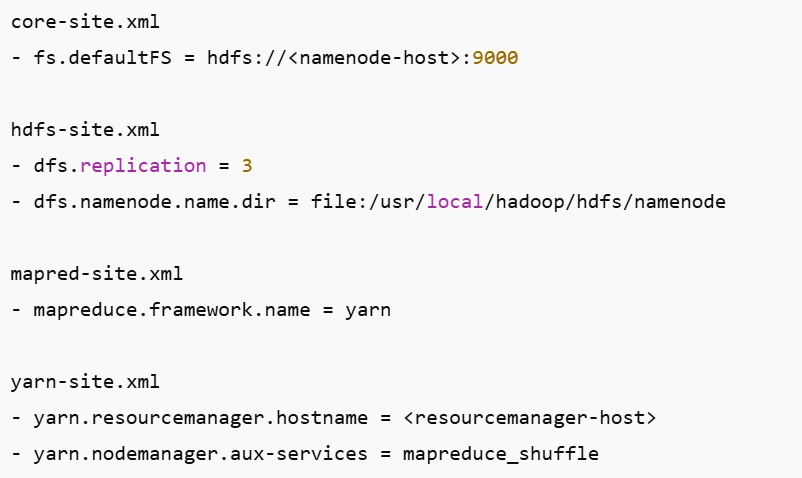
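A common setup, assumed here because paths depend on where you extracted Hadoop, exports `JAVA_HOME` and `HADOOP_HOME` and adds `$HADOOP_HOME/bin` and `$HADOOP_HOME/sbin` to `PATH`. After running `source ~/.bashrc`, the hypothetical Python helper below can confirm those variables are in place.

```python
import os
from typing import List, Mapping


def check_hadoop_env(env: Mapping[str, str]) -> List[str]:
    """Return a list of problems with the Hadoop-related environment variables."""
    problems: List[str] = []
    for var in ("JAVA_HOME", "HADOOP_HOME"):
        if not env.get(var):
            problems.append(f"{var} is not set")
    home = env.get("HADOOP_HOME")
    if home:
        # Normalise entries so "/opt/hadoop/bin/" and "/opt/hadoop/bin" compare equal.
        path_entries = {os.path.normpath(p)
                        for p in env.get("PATH", "").split(os.pathsep) if p}
        for sub in ("bin", "sbin"):
            expected = os.path.normpath(os.path.join(home, sub))
            if expected not in path_entries:
                problems.append(f"{expected} is not on PATH")
    return problems


if __name__ == "__main__":
    issues = check_hadoop_env(os.environ)
    print("\n".join(issues) if issues else "Environment looks good")
```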

Step 3: Edit the configuration files:
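Hadoop reads its settings from XML files under `etc/hadoop/` in the installation directory. Each file is a `<configuration>` element holding `<property>` entries with a `<name>` and a `<value>`. The Python sketch below renders that layout for a minimal single-node (pseudo-distributed) setup; the property values follow the Apache single-node guide and are assumptions for a one-machine cluster, not production settings.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def hadoop_config_xml(properties: Dict[str, str]) -> str:
    """Render properties in Hadoop's <configuration><property>...</property> layout."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    return ET.tostring(root, encoding="unicode")


# Single-node (pseudo-distributed) values; a real cluster needs different ones.
CONFIGS: Dict[str, Dict[str, str]] = {
    "core-site.xml": {"fs.defaultFS": "hdfs://localhost:9000"},
    "hdfs-site.xml": {"dfs.replication": "1"},  # only one DataNode to hold copies
    "mapred-site.xml": {"mapreduce.framework.name": "yarn"},
    "yarn-site.xml": {"yarn.nodemanager.aux-services": "mapreduce_shuffle"},
}

if __name__ == "__main__":
    for filename, props in CONFIGS.items():
        print(f"--- etc/hadoop/{filename} ---")
        print(hadoop_config_xml(props))
```

Copy each printed document into the matching file, for example `etc/hadoop/core-site.xml`.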

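Step 4: Format the NameNode. This initialises the HDFS metadata; run it only once, because reformatting erases the existing file system metadata: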

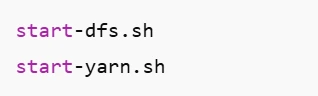

Step 5: Start the services:

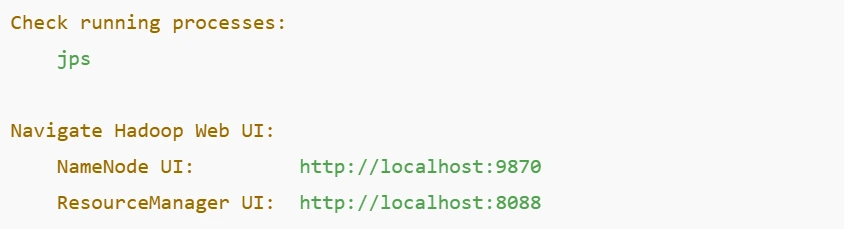

Step 6: Check the setup:
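A quick way to check the setup is the JDK's `jps` tool, which lists running Java processes. On a single-node cluster started with `start-dfs.sh` and `start-yarn.sh`, you would typically expect NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. The Python sketch below parses `jps` output and reports any of these that are missing; the expected list is an assumption for a single-node setup.

```python
import shutil
import subprocess
from typing import List, Set

# Daemons assumed to run on a single-node cluster after start-dfs.sh and start-yarn.sh.
EXPECTED_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode",
                    "ResourceManager", "NodeManager"}


def running_daemons(jps_output: str) -> Set[str]:
    """Parse `jps` output, whose lines look like '<pid> <MainClassName>'."""
    names: Set[str] = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2:
            names.add(parts[1])
    return names


def missing_daemons(jps_output: str) -> List[str]:
    return sorted(EXPECTED_DAEMONS - running_daemons(jps_output))


if __name__ == "__main__":
    if shutil.which("jps") is None:
        print("jps not found: make sure the JDK's bin directory is on PATH")
    else:
        out = subprocess.run(["jps"], capture_output=True, text=True).stdout
        missing = missing_daemons(out)
        print("All expected daemons are running" if not missing
              else "Not running: " + ", ".join(missing))
```

You can also open the NameNode web UI, which listens on port 9870 by default in Hadoop 3.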

Basic Configuration Tips for Optimization

- Increase block size for large files (dfs.blocksize).

- Adjust the replication factor for reliability (dfs.replication).

- Tune memory allocation in yarn-site.xml.

- Monitor the logs (for example, tail -f logs/hadoop-*.log).

- Optimise MapReduce parallelism, for example by adjusting the number of reduce tasks (mapreduce.job.reduces).
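Block size and replication come down to simple arithmetic. Assuming the defaults of `dfs.blocksize` = 128 MB and `dfs.replication` = 3 (Hadoop 2 and later), the Python sketch below shows how doubling the block size halves the number of blocks for a 10 GB file. Fewer blocks mean less metadata for the NameNode to track and, with default input splits, fewer map tasks.

```python
MB = 1024 * 1024
GB = 1024 * MB


def block_count(file_size: int, block_size: int) -> int:
    """Number of HDFS blocks a file occupies (the last block may be only partly full)."""
    return -(-file_size // block_size)  # ceiling division


def raw_bytes_stored(file_size: int, replication: int) -> int:
    """Disk used across the cluster: every byte is stored `replication` times.

    A partly full last block takes up only its actual size, not a whole block.
    """
    return file_size * replication


file_size = 10 * GB
for block_mb in (128, 256):
    print(f"dfs.blocksize={block_mb} MB -> {block_count(file_size, block_mb * MB)} blocks")
print(f"dfs.replication=3 -> {raw_bytes_stored(file_size, 3) // GB} GB of raw disk")
```

Here the 10 GB file needs 80 blocks at 128 MB but only 40 at 256 MB, while replication 3 makes it occupy 30 GB of raw disk either way.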

Working with Hadoop

The Hadoop framework consists of HDFS and the MapReduce framework. HDFS divides data into blocks and stores them on different machines, called nodes. Because the blocks are written to and processed on many machines in parallel, handling a large file takes far less time than it would on a single machine. Hadoop also keeps copies (replicas) of each block on different nodes, which provides high availability. With the default replication factor of three, it stores two copies on one rack and the third on a different rack, which protects against the failure of a whole rack while reducing network bandwidth between racks. Because the data is distributed, HDFS also efficiently handles the networking involved.
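The splitting and rack-aware placement described above can be sketched in Python. The cluster layout (`CLUSTER`, two racks of three DataNodes) is hypothetical, and the sizes are tiny for readability. The placement function is a simplified version of HDFS's default policy for replication factor 3: the first replica goes on the writer's node, and the second and third go on two different nodes of another rack.

```python
import random
from typing import Dict, List

# Hypothetical cluster: rack name -> DataNodes on that rack.
CLUSTER: Dict[str, List[str]] = {
    "rack1": ["dn1", "dn2", "dn3"],
    "rack2": ["dn4", "dn5", "dn6"],
}


def split_into_blocks(data: bytes, block_size: int) -> List[bytes]:
    """Divide a file into fixed-size blocks; the last one may be smaller."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def rack_of(node: str) -> str:
    return next(rack for rack, nodes in CLUSTER.items() if node in nodes)


def place_replicas(writer: str, rng: random.Random) -> List[str]:
    """Simplified default placement for replication factor 3.

    1st replica: the writer's own node.
    2nd replica: a node on a different rack (survives a whole-rack failure).
    3rd replica: another node on that same remote rack (saves inter-rack bandwidth).
    """
    remote_rack = rng.choice([r for r in CLUSTER if r != rack_of(writer)])
    second, third = rng.sample(CLUSTER[remote_rack], 2)
    return [writer, second, third]


rng = random.Random(42)
blocks = split_into_blocks(b"x" * 1000, block_size=256)  # tiny sizes for illustration
for i, block in enumerate(blocks):
    print(f"block {i} ({len(block)} bytes) -> {place_replicas('dn1', rng)}")
```

Losing an entire rack still leaves at least one replica of every block, while only one copy of each block crosses between racks during the write.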

Reading data:

Reading data requires the client to have the Hadoop client library (a Java JAR file) and the cluster configuration needed to find the NameNode (the master node, as HDFS uses a master/slave architecture). The client first contacts the NameNode with the name and location of the file. The NameNode authenticates the user, verifies their permissions, and returns the locations of the file's blocks, listing for each block the DataNodes that store a copy. The client then contacts the closest DataNode holding the first block and starts reading, moving on to each following block in the same way. The process stops when the client cancels the operation or all blocks have been retrieved.
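The read path can be modelled in a few lines of Python. Everything here is hypothetical: `NAMENODE_METADATA` stands in for the NameNode's block map, `BLOCK_DATA` for the DataNodes' disks, and `DISTANCE` for the network distance from a client running on dn1 (0 for the same node, 2 for the same rack, 4 for another rack, following Hadoop's topology convention). Authentication and permission checks are omitted. The sketch also skips a failed DataNode and tries the next-closest replica, which is how the HDFS client behaves when a replica is unavailable.

```python
from typing import Dict, FrozenSet, List, Tuple

# Hypothetical NameNode metadata: path -> list of (block id, DataNodes holding a replica).
NAMENODE_METADATA: Dict[str, List[Tuple[str, List[str]]]] = {
    "/logs/app.log": [
        ("blk_1", ["dn4", "dn1", "dn5"]),
        ("blk_2", ["dn5", "dn2", "dn6"]),
    ],
}
# Hypothetical block contents (every replica of a block holds the same bytes).
BLOCK_DATA: Dict[str, bytes] = {"blk_1": b"hello ", "blk_2": b"hdfs"}
# Distance from the client on dn1: 0 same node, 2 same rack, 4 other rack.
DISTANCE: Dict[str, int] = {"dn1": 0, "dn2": 2, "dn3": 2, "dn4": 4, "dn5": 4, "dn6": 4}


def read_file(path: str, dead_nodes: FrozenSet[str] = frozenset()) -> Tuple[bytes, List[str]]:
    """Read a file block by block, each from the closest live replica.

    Returns the file contents and the DataNode that served each block.
    """
    if path not in NAMENODE_METADATA:  # the NameNode rejects unknown paths
        raise FileNotFoundError(path)
    data = bytearray()
    served_by: List[str] = []
    for block_id, replicas in NAMENODE_METADATA[path]:
        # Try replicas from closest to farthest; sorted() keeps list order on ties.
        for node in sorted(replicas, key=DISTANCE.__getitem__):
            if node not in dead_nodes:
                data += BLOCK_DATA[block_id]
                served_by.append(node)
                break
        else:
            raise OSError(f"no live replica for {block_id}")
    return bytes(data), served_by


print(read_file("/logs/app.log"))
print(read_file("/logs/app.log", dead_nodes=frozenset({"dn2"})))
```

With every node healthy, the client reads blk_1 from its own node (dn1) and blk_2 from a node on the same rack (dn2); if dn2 is down, it falls back to dn5 on the other rack.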

Writing data:

Writing data to HDFS is a bit more complicated than reading it.

The client sends a request to the NameNode with the file name and location. Once the NameNode has authenticated the user and verified their permissions, it updates its metadata to include the new file, and the client can start writing through an output stream. The data is split into packets (64 KB each by default) that are placed in a data queue. A separate streamer thread consumes this queue: it asks the NameNode for a list of DataNodes to store each block, connects to the first DataNode, and sends the packets along a pipeline in which each DataNode stores the data and forwards it to the next one, creating the replicas. The DataNodes send acknowledgements back to the client as they complete each write. If an error occurs, such as a DataNode failing, writing pauses: any packets sent after the last successful acknowledgement are put back into the queue to be resent, the failed node is removed from the pipeline, the block on the healthy DataNodes is given a new identity, and writing resumes. The NameNode then arranges an extra replica on a healthy DataNode so that the block does not stay under-replicated.
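The pipeline and its failure handling can be simulated in Python. This is a simplified, single-threaded model: packets pass through the pipeline one at a time, a failure is injected at a chosen packet, and recovery simply drops the failed node (the NameNode's later re-replication is not modelled). The function and parameter names are illustrative, and the 64 KB packet size mirrors HDFS's default `dfs.client-write-packet-size`.

```python
from collections import deque
from typing import Deque, Dict, List, Optional, Tuple

PACKET_SIZE = 64 * 1024  # mirrors the HDFS client's default packet size


def write_block(data: bytes, pipeline: List[str],
                fail_node: Optional[str] = None, fail_at_packet: int = -1) -> Dict[str, bytes]:
    """Simulate writing one block through a DataNode pipeline.

    Returns the bytes each surviving DataNode ends up storing.
    """
    pipeline = list(pipeline)  # don't mutate the caller's list
    stored: Dict[str, bytearray] = {node: bytearray() for node in pipeline}
    data_queue: Deque[Tuple[int, bytes]] = deque(
        (seq, data[i:i + PACKET_SIZE])
        for seq, i in enumerate(range(0, len(data), PACKET_SIZE)))
    ack_queue: Deque[Tuple[int, bytes]] = deque()

    while data_queue:
        seq, packet = data_queue.popleft()
        ack_queue.append((seq, packet))  # sent, awaiting acknowledgement
        if fail_node in pipeline and seq == fail_at_packet:
            # The failed node never acks: drop it and its partial data...
            pipeline.remove(fail_node)
            del stored[fail_node]
            # ...and push unacknowledged packets back to the front of the data queue.
            while ack_queue:
                data_queue.appendleft(ack_queue.pop())
            continue
        for node in pipeline:  # each node stores the packet, then forwards it downstream
            stored[node] += packet
        ack_queue.popleft()  # every node in the pipeline acknowledged this packet
    return {node: bytes(buf) for node, buf in stored.items()}


block = bytes(range(256)) * 1024  # 256 KB -> four 64 KB packets
result = write_block(block, ["dn1", "dn4", "dn5"], fail_node="dn4", fail_at_packet=2)
print({node: len(buf) for node, buf in result.items()})
```

Even though dn4 fails on the third packet, dn1 and dn5 each end up with the complete block, because the unacknowledged packet is resent through the shortened pipeline.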

Integrating Hadoop with Other Big Data Technologies

Hadoop can integrate with various technologies to enhance capabilities:

- Spark performs in-memory processing, which makes many computations, especially iterative ones, faster than with Hadoop's MapReduce.

- Hive facilitates the integration of SQL-based querying languages with Hadoop, while HBase provides real-time NoSQL database support.

- Kafka and Flume are both used to ingest real-time event streams into Hadoop.

- Sqoop efficiently transfers bulk data between Hadoop and structured datastores, allowing users to import data from databases to Hadoop HDFS and vice-versa.

- Moreover, Apache Drill and Impala can be integrated for interactive SQL querying, while TensorFlow and Spark MLlib support machine learning.

Industry Applications and Challenges

Hadoop plays a pivotal role across various industries by enabling data-driven decisions and real-time insights.

Use Cases of Hadoop in Different Industries

Healthcare: Predictive Analytics & Patient Insights

Healthcare data is highly valuable, and Hadoop helps organisations analyse vast amounts of patient data for predictive analytics and early disease detection. It assists in real-time monitoring and personalised treatment and supports data-driven decision-making. However, data privacy and regulatory compliance still remain challenges.

Finance: Fraud Detection & Risk Management

Hadoop can help detect fraud and manage risk effectively in the financial sector. It can process massive volumes of financial data to identify anomalies, in near real time when combined with stream-processing tools such as Spark or Kafka. Machine learning models built on Hadoop can also predict fraud. However, data security, regulatory compliance, and high-speed transactions remain key challenges.

Retail: Customer Personalization & Inventory Forecasting

Personalised recommendations and targeted marketing enhance the customer experience. Hadoop analyses customers' historical data to provide insights into their tastes, preferences, habits, and so on, leading to higher sales. It also helps forecast demand, optimise inventory, and reduce overstock. However, integrating Hadoop with outdated retail systems can pose data security risks.

Common Challenges in Hadoop Implementation

Security & Privacy Concerns

Hadoop's distributed nature exposes it to security threats such as unauthorised access to data, data breaches, and cyber attacks. Its multi-tenant environment can also cause data leaks. Ensuring data security and privacy therefore remains a challenge.

Scalability & Performance Issues

Large data volumes can cause network congestion and performance issues, and hardware limitations may lead to inefficient resource allocation that slows down processing. Scalability and performance therefore remain persistent challenges in managing large-scale deployments.

Conclusion

Hadoop remains at the core of big data processing, delivering valuable results, especially in healthcare, finance, and retail. However, strategic solutions and proper planning are required to mitigate the challenges of data security, performance, scalability, and privacy. The good news is that, combined with AI/ML, Hadoop can drive deeper insights from data, supporting predictive analytics and automation. Hadoop's role will continue to expand, shaping the future of data-driven enterprises.